Scientific Papers: What is ‘Reproducible’, and Does it Matter?

Reproducing a study is the scientific step that tests how the findings of published papers hold up in real life when the experiments are repeated. The paradox is that even transparent, accessible experiments may turn out not to be reproducible, while their conclusions are enforced as if they were. Two illustrations follow, but keep in mind that it is all a bit more complicated.

An expert in AI and ML recently posted on LinkedIn that they had created a website that lists, and denounces, all types of pseudo-official research in this field that can’t be verified or reproduced. My suggestion, made without any sarcasm, was to focus instead on what can be, as it would save us all a lot of time.

I started by asking what reproducible means, which is not as simple a question as it looks from a distance.

The difference between reproducibility and replicability.

“Reproducibility” refers to independent researchers reaching the same conclusions using their own methods. “Replicability” refers to a different team reaching the same results using the original author’s artefacts. Usage is not uniform: some fields and institutions apply the two terms the other way round.

Indeed, in modelling, AI and ML, those artefacts include specific code, algorithms, data and hardware.

You can therefore end up with black-box logic: when findings have strong commercial potential, authors may not want to provide enough information for others to test and check them, and so to appropriate them.

This is risk management in communication and protection of business secrets. Fair enough, but.

There are also chaotic systems, which may not be easy to replicate in some cases. It is a complex topic: think of how a fire or an explosion behaves, or how some species reproduce.
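To make the chaotic case concrete, here is a minimal sketch in Python. It uses the logistic map, a textbook chaotic system chosen purely for illustration; it is not taken from any of the studies discussed in this post, and the function name, starting value and size of the perturbation are all assumptions.

```python
def logistic_trajectory(x0, r=4.0, steps=100):
    """Iterate the logistic map x -> r * x * (1 - x) and return every value visited."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs


# Same code, same input: the run repeats exactly.
original = logistic_trajectory(0.2)
rerun_same = logistic_trajectory(0.2)

# Same code, input off by one part in ten billion (hypothetical measurement error).
rerun_off = logistic_trajectory(0.2 + 1e-10)

early_gap = abs(original[5] - rerun_off[5])
max_gap = max(abs(a - b) for a, b in zip(original, rerun_off))

print("identical rerun matches:", original == rerun_same)
print("gap after 5 steps:", early_gap)
print("largest gap over the run:", max_gap)
```

With the original artefacts and the exact same input, the run repeats to the last digit. An independent team that measures the starting point ever so slightly differently stays close for a few steps and then ends up on an unrelated trajectory, which is why "same method, same result" is a harder promise for such systems.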

So deciding what is reproducible, and which conditions must be met before we can say that something can and should be reproduced, can be tricky.

Essentially, we can distinguish two types of studies:

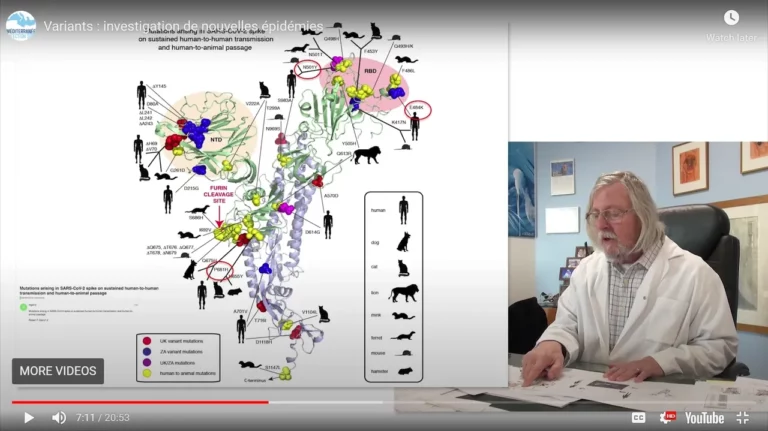

1) studies where everything is in our hands, available for testing and control, such as last year’s falsely alarming Covid code from Ferguson, 13 years old, buggy and totally unreliable, which led to the first lockdown

2) studies with very little available to examine scientifically, such as Google’s recent publication in Nature describing successful trials of an AI that looked for signs of breast cancer in medical images

In both cases, researchers and data scientists can’t reproduce the findings. In the first case, however, all the information was, and still is, available to everyone, and was therefore available before the first lockdown was baselessly imposed.

One could say Ferguson’s alarmist projections were wrong before his research had even started: the worm was in the apple. Many tried, but nothing could be reproduced, unless you count filming a worm getting fatter and fatter as it destroys the fruit. Nevertheless, the UK Government took it for more than God’s word. This modus operandi was replicated three times, once for each of the three lockdowns, and none of the research and analysis provided for them could be reproduced.

It is impressive to note that Ferguson has, for a few decades now, built a remarkable track record of forecasts and models that are both unreproducible and unreplicable, while the one thing his assignments replicate with staggering reproducibility is soaking the taxpayer, and that is before assessing the damage caused.

In the case of Google, researchers can’t access all the data and parameters required to evaluate the publication. How, then, could Nature’s peer reviewers conclude that the paper could be published?

Some suggested it could be a promotional article placed in a prestigious journal. If so, it follows a pattern we all witnessed with LancetGate and the Surgisphere analytics scandal. But if Google has really achieved the published results, it is huge progress for health and science. It also raises the question of how we assess research and its commercial applications while reconciling full transparency with full intellectual property protection.

Should you wish to learn more:

Stanford University: Reproducible research, the hunt for the truth

Institut Hospitalier Universitaire (IHU), Marseille, France: “Why most of published results are false”

Thank you for your time and interest.
